Embodied Code

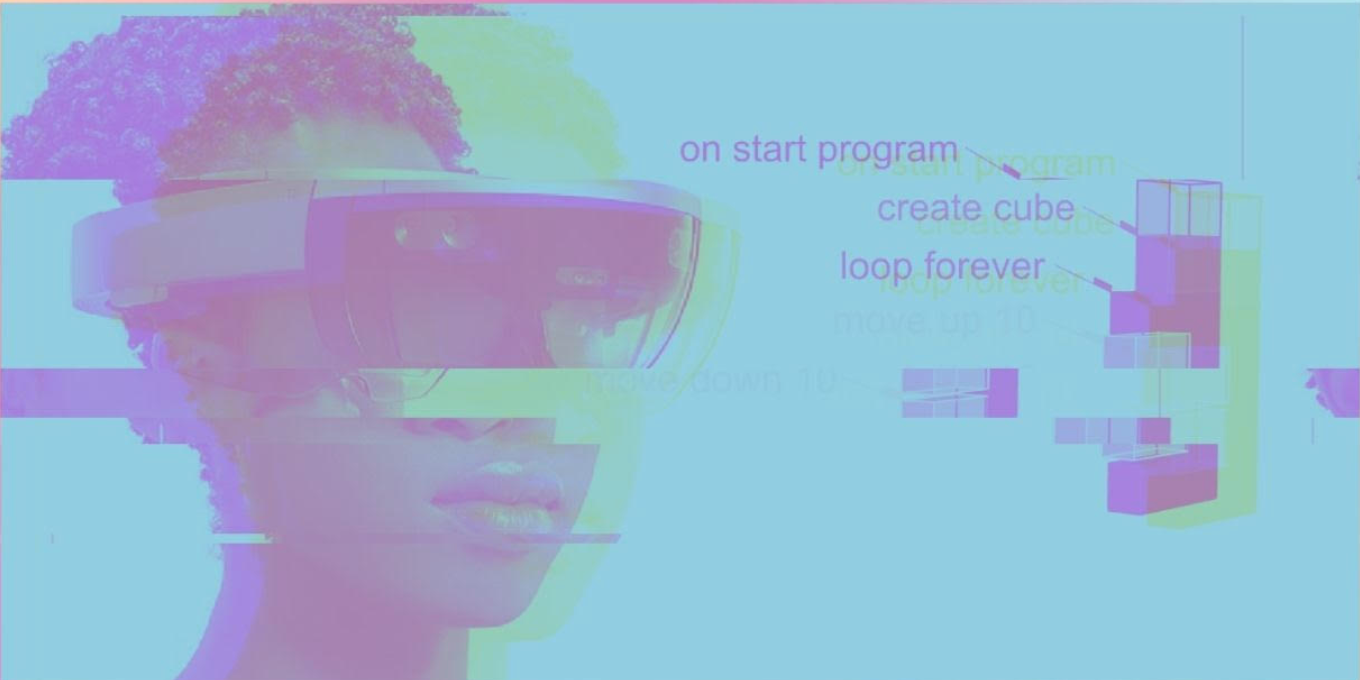

A Platform for Embodied Coding in Virtual and Augmented Reality

Overview

The increasing sophistication and availability of Augmented and Virtual Reality (AR/VR) technologies have the potential to transform how we teach and learn computational concepts and coding. This project develops a platform for creative coding in virtual and augmented reality. The Embodied Coding Environment (ECE) is a node-based system developed in the Unity game engine. It is conceptualized as a merged digital/physical workspace where spatial representations of code, the visual outputs of the code, and user editing histories are co-located in a virtual 3D space.

It has been theorized that learners’ abilities to understand and reason about functions, algorithms, conditionals, and other abstract computational concepts stem in part from more fundamental sensorimotor and perceptual experiences of the physical world. Our own work, for instance, has revealed that computer science (CS) educators incorporate a wide range of metaphors grounded in tangible experience into their lessons on computational concepts, such as demonstrating sorting algorithms with a deck of cards or illustrating the transfer of information between functions by throwing paper airplanes. Our long-term research aims center on the question of how a coding platform that supports these types of embodied conceptual phenomena can make learning to code more intuitive.

Getting Started

Follow our Getting Started Guide to run the app on your headset.

Video

Video Documentation for SIGCSE 2024. Video by Reid Brockmeier and Kylie Muller.

Workshops, Presentations, Papers

- Lay, R.; Bhutada, R.; Lobo, A.; Twomey, R.; Eguchi, A.; Wu, Y. “Embodied Code: Creative Coding in Virtual Reality”, SIGCSE TS 2024 (55th ACM Technical Symposium on Computer Science Education)

- Wu, Y. C., Sharkey, T., and Wood, T. (2023). “Designing the embodied coding environment: A platform inspired by educators and learners.” CIRCLS Rapid Community Report (https://circls.org/partnerships-for-change)

- “An Immersive Environment for Embodied Code”, 2022 ACM Conference on Human Factors in Computing Systems, CHI’22 (interactivity workshop and extended abstract)

- “Need Finding for an Embodied Coding Platform: Educators’ Practices and Perspectives”, 14th International Conference on Computer Supported Education, CSEDU 2022 (paper)

- San Diego Computer Science Teachers Association, November 18, 2021 (demo)

- UCSD Design Innovation Building Dedication, November 18, 2021 (demo)

- “Exploring Virtual Reality and Embodied Computational Reasoning”, 17th Annual ACM International Computing Education Research Conference, ICER 2021, Saturday August 14, 2021 (workshop)

- “Embodied Coding in Augmented Reality”, 2021 NSF STEM for All Video Showcase (video)

- “How are computational concepts learned and taught? A thematic analysis study informing the design of an Augmented Reality coding platform”, 13th International Conference on Computer Supported Education, CSEDU 2021, 23-25 April, 2021 (poster and abstract)

- Study: Spring Break Research Experience, UC San Diego, March 2021

Team

- Ying Wu, PI insight.ucsd.edu

- Robert Twomey, co-PI roberttwomey.com

- Monica Sweet, Investigator UCSD CREATE

- Amy Eguchi, Investigator UCSD EDS

- Rhea Bhutada

- Alejandro Lobo

- Ryan Lay

- Reid Brockmeier

- Kylie Muller

Alumni

- Timothy Wood, Postdoc fishuyo.com

- Tommy Sharkey, Grad Researcher tlsharkey.com

Contact

To learn more, contact PI Ying Wu at ycwu@ucsd.edu

Participating Labs

Support

This work is supported by the National Science Foundation under Grant #2017042.